Test and optimize AI workloads on real infrastructure with full control and full privacy.

Unlike hosted playgrounds or generic sandboxes, Saptiva Lab runs on real infrastructure with the same orchestration, isolation, and compliance guarantees as your production deployments.

Run evaluations with your own data, simulate complex workflows, compare model behavior, and prepare agents for production.

Do it all without exposing sensitive assets or compromising security.

Saptiva Lab lets you test models, agents, and pipelines under production-like conditions, with your own data, full observability, and infrastructure you control.


Compare open and proprietary models such as LLaMA, Mistral, Claude, and DeepSeek using real measurements of latency, accuracy, and cost.

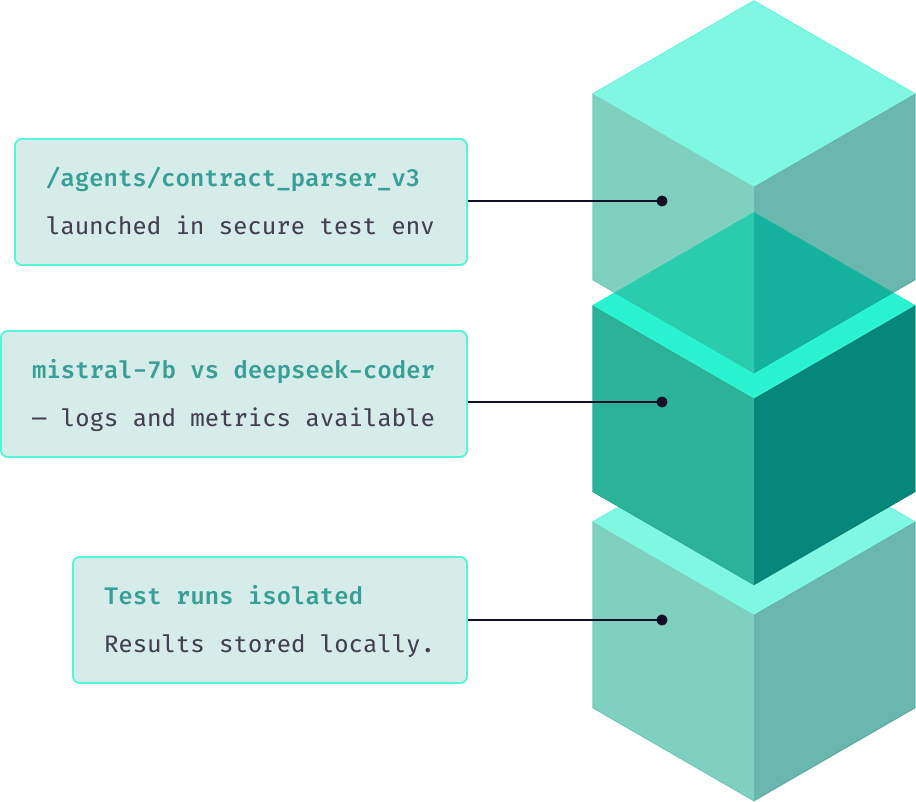

Simulate multi-step reasoning, data retrieval, and document interaction with agents running entirely inside your controlled environment.

Run private inference using your real datasets without exposing inputs or outputs to external systems.

Tune vector stores, scoring strategies, and retrieval performance under real-world constraints.

Use real infrastructure with no commitment, no data leaving your environment, and full transparency at every step.

Saptiva Lab provides your technical team with a fully isolated and secure environment to build, test, and deploy AI workloads. You get real-time observability, fine-grained access controls, and complete developer tooling out of the box.

Each Lab instance runs in a private environment, whether cloud, on-premises, hybrid, or air-gapped.

Monitor latency, cost, and model behavior with built-in logs, traces, and metrics for full transparency.

Use CLIs, SDKs, and APIs to orchestrate models, agents, and workflows with complete control and no black boxes.